From VR to real-world safety: Helping kids learn to cross the street

The Project

The Child Development Research Unit (CDRU) at the University of Guelph studies developmental issues related to child health. I'd worked with them before—building VR simulations to study child pedestrian behaviour—so when they set out to create a VR training game to help teach children (ages 6-9) how to cross the street safely, I joined a small team to help make it happen.

The goal was to build a finished system that could:

- Train kids in realistic suburban environments

- Track their performance and learning

- Support academic research on pedestrian safety

- Eventually be rolled out in classrooms

The system also needed to be:

- Engaging enough to keep kids focused

- Simple enough to navigate with an Xbox controller

- Flexible enough for researchers to manage study participants, collect data, and recover from interruptions or technical issues

The project spanned about two years in total.

Team & Role

I wore a number of hats on this project—software development, UX design, user research, and telemetry design & data analysis—helping take the system from early concepts through to a working product. I was responsible for designing the interaction model, running user testing with child participants, and building the telemetry system to track and analyze performance data.

I worked alongside a small team: another developer/researcher, research assistants from the CDRU, the pediatric psychologists leading the lab, and a few other developers and artists from time to time.

Development

We developed the system in Python using the Vizard engine, building it from the ground up in collaboration with the pediatric psychologists.

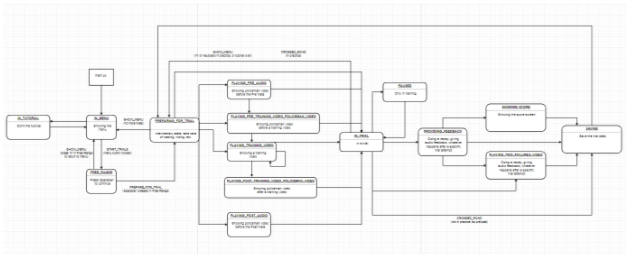

Working with them, we sketched out key scenarios and environments participants would encounter, creating flow diagrams to map out how training stages would progress. We worked together to prioritize essential features.

The system needed to support:

- Multiple environments simulating unsafe pedestrian conditions (e.g., curves in the road, parked cars, and other visual occlusions)

- Real-time detection of unsafe behaviour (e.g., not looking both ways, stepping into traffic too late)

- Timely, relevant feedback to help participants understand and correct their mistakes

- Assessment of skill progression, so participants could advance once they were competent with a concept
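To give a sense of what real-time detection of a behaviour like "not looking both ways" can involve, here is a minimal Python sketch. It is not the project's actual code: the function name `looked_both_ways`, the yaw convention (degrees relative to facing straight across the road, negative meaning left), and the 45-degree threshold are all assumptions made for illustration.

```python
def looked_both_ways(yaw_samples_deg, threshold_deg=45.0):
    """Return True if the head turned past the threshold to both sides.

    yaw_samples_deg: head yaw per frame, in degrees, relative to facing
    straight across the road (assumed convention: negative = left,
    positive = right). threshold_deg is an illustrative value, not a
    figure from the study.
    """
    looked_left = any(yaw <= -threshold_deg for yaw in yaw_samples_deg)
    looked_right = any(yaw >= threshold_deg for yaw in yaw_samples_deg)
    return looked_left and looked_right


# Example: the participant glances left, then right, before stepping out.
print(looked_both_ways([0.0, -50.0, -10.0, 60.0]))
```

A check like this would run on the head-tracking samples collected while the participant stands at the curb, so feedback can be triggered the moment they step into the road without having looked.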

Given the complexity of the real-time feedback system, we knew we needed to test early with a functional prototype.

We broke development into modules, starting with a basic environment where cars would pass and the user could walk around. From there, we layered on functionality, testing internally at each step before rolling out our first training module with real-time feedback.

We did some usability testing and iterated on each module before moving on to the next.

Along the way, we also built support tools to streamline content creation. One was a cutscene builder: an application that let the psychologists place cars, camera movements, and an avatar on a 2D canvas, then translated that layout into an in-game sequence that we could play for a user. These sequences were the basis of the feedback that participants would see.

Usability Testing & Iteration

Once we had a few training modules ready, we began user testing with children aged 6-9—our target audience. The CDRU had an existing panel of child participants, which made recruitment easier.

After each round of usability testing, we identified the most common and significant issues, brainstormed solutions, and worked with the psychologists to determine the best course of action. I then worked with the other developers to implement changes before running another round of testing.

Through this process, we solved a number of key usability challenges, detailed below.

Task Complexity

Some training tasks were too complex for the age group, and many participants struggled to understand or complete them.

Solution:

- We broke training into smaller, more focused sections, each teaching only one concept at a time.

- We adjusted replay camera angles to make each action clearer.

- We simplified training scripts, including language adjustments. For example, some children had trouble differentiating “left” and “right”, so we found alternative ways to guide them.

Impact:

Our changes significantly improved training completion rates.

Engagement

Some children lost interest before completing the training, reducing its effectiveness.

Solution:

- We added animations to the instructions and feedback, featuring a cartoon police officer.

- We introduced a star rating system with bonuses for strong performance.

- We shortened instructions and streamlined transitions between training stages.

Impact:

- Time needed to complete the training was reduced.

- Engagement improved significantly.

- Completion rates further increased.

Controls

Some participants found the controls confusing or difficult to use, particularly in combination with the VR headset.

Solution:

- We tested different control schemes to find one that felt more intuitive.

- We created an interactive tutorial to establish a baseline for control competence before training began.

Impact:

This substantially reduced control-related errors and frustration.

Motion Sickness

VR can cause motion sickness in some people, and it was a major blocker for any participant affected. Our system initially had two major triggers:

- Technical performance issues: low frame rates and input lag can increase nausea.

- Sensory conflict in replays: the feedback system required showing participants replays and instructions with dynamic camera movements while they were sitting still, which could create sensory conflict.

Solution:

- We optimized performance to maintain a higher frame rate, which included swapping in new 3D models and lighting.

- We redesigned how the replays worked. Instead of shifting the participant’s view, we gently reset them to a starting position and placed a large projected screen in front of them in the virtual world, much like a movie theatre. Replays played on this screen instead, avoiding camera movement issues.

Impact:

- Motion sickness was dramatically reduced.

- Training completion increased to nearly 100%.

(One new issue we ran into after implementing the movie screen solution was that some participants ignored it, missing important instructions. This was largely solved by improving feedback and engagement, as described earlier.)

Telemetry & Behavioural Metrics

A key part of the system was telemetry data—this was how we could quantify user behaviour and prove whether the training interventions were effective.

To define what needed tracking, I worked closely with the pediatric psychologists leading the study. They had high-level research questions, like:

- How fast were the participants moving?

- Was it a safe road entry?

- Did they check for oncoming traffic before stepping into the road?

- Did they pay attention to traffic while crossing?

My job was to translate these behavioural questions into concrete, measurable metrics that we could track in the program.
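As one hedged example of that translation, "Was it a safe road entry?" could be expressed as the time gap to the nearest approaching car at the moment the participant steps into the road. The sketch below is illustrative only: the function names, the one-dimensional road axis, and the 4-second threshold are assumptions, not the definitions used in the study.

```python
def time_gap_at_entry(participant_x, cars):
    """Seconds until the nearest approaching car reaches the participant.

    participant_x: position along the road axis, in metres.
    cars: list of (x_position_m, velocity_mps) along the same axis.
    Returns None when no car is approaching.
    """
    gaps = []
    for car_x, velocity in cars:
        distance = participant_x - car_x
        # A car is approaching only if its velocity points toward the
        # participant, i.e. distance and velocity share the same sign.
        if velocity != 0 and distance / velocity > 0:
            gaps.append(distance / velocity)
    return min(gaps) if gaps else None


def is_safe_entry(participant_x, cars, min_gap_s=4.0):
    """True if no approaching car arrives within min_gap_s (assumed value)."""
    gap = time_gap_at_entry(participant_x, cars)
    return gap is None or gap >= min_gap_s


# One car 30 m to the left at 10 m/s, one 20 m to the right at 4 m/s,
# and one further right that is driving away.
cars = [(-30.0, 10.0), (20.0, -4.0), (50.0, 5.0)]
print(time_gap_at_entry(0.0, cars), is_safe_entry(0.0, cars))
```

Framing the question as a single number per crossing makes it easy to compare entries before and after training, whatever threshold the researchers settle on.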

Raw data → Meaningful insights

We had access to the following spatial data, sampled at 60 Hz:

- Participant location and viewing angle

- Car positions (moving and parked)

- Road boundaries and locations of visual obstructions

This gave us raw data, but we needed to extract meaning from it. For example, defining “paying attention to traffic” required translating head orientation and timing data into a clear behavioural measure.
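A minimal sketch of one way such a measure could be built: for each sample, check whether a car falls within a cone around the participant's gaze direction, then report the share of samples where it does. The names `is_looking_at` and `attention_fraction`, the coordinate convention, and the 30-degree half-angle are assumptions for illustration, not the metric as it was actually defined.

```python
import math


def is_looking_at(participant_xz, yaw_deg, target_xz, half_fov_deg=30.0):
    """True if the target lies within half_fov_deg of the gaze direction.

    Assumed convention: yaw 0 faces +z, and positive yaw turns toward +x.
    """
    dx = target_xz[0] - participant_xz[0]
    dz = target_xz[1] - participant_xz[1]
    bearing = math.degrees(math.atan2(dx, dz))
    # Wrap the angular difference into [-180, 180) before comparing.
    diff = (bearing - yaw_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= half_fov_deg


def attention_fraction(frames, half_fov_deg=30.0):
    """Share of samples in which the participant faced the car.

    frames: list of (participant_xz, yaw_deg, car_xz), one per sample.
    """
    if not frames:
        return 0.0
    hits = sum(is_looking_at(p, yaw, car, half_fov_deg) for p, yaw, car in frames)
    return hits / len(frames)


frames = [
    ((0.0, 0.0), 90.0, (10.0, 0.0)),   # facing the car
    ((0.0, 0.0), 0.0, (10.0, 0.0)),    # facing across the road
    ((0.0, 0.0), 90.0, (10.0, 1.0)),   # car slightly off-centre
    ((0.0, 0.0), -90.0, (10.0, 0.0)),  # facing the opposite way
]
print(attention_fraction(frames))
```

Head orientation is only a proxy for where someone is looking, so a cone width like this would need to be agreed with the researchers and validated against observed behaviour.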

Once each metric was defined, I:

- Implemented it in code and tested its accuracy.

- Documented it—how it was calculated, expected output formats, and how it should be interpreted.

- Created visualizations to help the psychologists interpret the data, when appropriate.

One tricky problem was that frame rate fluctuations and update delays while the program was running could cause small spikes in velocity measurements, so I also applied signal processing techniques, such as Savitzky-Golay filtering, to smooth the data and keep the derived metrics reliable.
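To illustrate the idea, here is a small, dependency-free sketch of Savitzky-Golay smoothing applied to speeds derived from position samples. The window size (5 samples) and polynomial order (quadratic) are assumptions chosen because their coefficients are standard tabulated values; the settings actually used in the project are not stated here. In practice, a library routine such as SciPy's `scipy.signal.savgol_filter` handles arbitrary windows and orders.

```python
import math

# Savitzky-Golay smoothing coefficients for a 5-sample window and a
# quadratic fit (standard tabulated values: -3, 12, 17, 12, -3 over 35).
SG5_COEFFS = (-3 / 35, 12 / 35, 17 / 35, 12 / 35, -3 / 35)


def speeds_from_positions(positions, timestamps):
    """Finite-difference speed between consecutive (x, z) samples.

    Uses the recorded timestamps rather than a fixed 1/60 s step, so
    uneven frame timing is reflected in the raw speeds.
    """
    speeds = []
    for i in range(1, len(positions)):
        dt = timestamps[i] - timestamps[i - 1]
        dist = math.hypot(positions[i][0] - positions[i - 1][0],
                          positions[i][1] - positions[i - 1][1])
        speeds.append(dist / dt if dt > 0 else 0.0)
    return speeds


def savgol_smooth5(values):
    """Smooth with a 5-point quadratic Savitzky-Golay filter.

    The first and last two samples are left unfiltered for simplicity.
    """
    out = list(values)
    for i in range(2, len(values) - 2):
        window = values[i - 2:i + 3]
        out[i] = sum(c * v for c, v in zip(SG5_COEFFS, window))
    return out


# A steady 1 m/s walk with a single spurious spike in the middle.
raw = [1.0, 1.0, 1.0, 1.0, 5.0, 1.0, 1.0, 1.0, 1.0]
print(savgol_smooth5(raw))
```

Unlike a plain moving average, this filter fits a low-order polynomial within each window, so genuine changes in walking speed are preserved better while isolated spikes are damped.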

Outcome & Impact

The system, later officially called Safe Peds, proved to be highly effective in teaching safe pedestrian behaviours to children. Testing showed marked improvements in how children assessed traffic, checked for danger, and crossed streets safely after training.

The impact extended beyond the lab:

- The project’s findings were published in major academic journals, including the Journal of Pediatric Psychology.

- The research contributed to multiple PhD dissertations and was presented at international conferences.

- The project was covered by national news outlets, including The Toronto Star and CityNews.

- The system has since been rolled out in schools across Ontario, where it continues to be used as a training tool for pedestrian safety education.

This was a project that had a real-world impact, using VR and behavioural research to help make streets safer for kids. I can't help but think about it any time I step into the street.